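python - Scrapy simulated login keeps getting a 404

Problem description

I'm using Python (Scrapy) to simulate logging in to a website, but I keep getting a 404. Any guidance would be appreciated!

Code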

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.http import Request, FormRequest
from scrapy.selector import Selector


class StackSpiderSpider(scrapy.Spider):

    name = 'stack_spider'
    start_urls = ['https://stackoverflow.com/']
    headers = {
        'host': 'cdn.sstatic.net',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept-Language': 'en-US,en;q=0.5',
        'Connection': 'keep-alive',
        'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:54.0) Gecko/20100101 Firefox/54.0',
    }

    # Override the spider's start_requests to issue a custom request;
    # once it succeeds, the callback is invoked.
    def start_requests(self):
        return [Request('https://stackoverflow.com/users/login',
                        meta={
                            # 'dont_redirect': True,
                            # 'handle_httpstatus_list': [302],
                            'cookiejar': 1,
                        },  # meta added
                        callback=self.post_login)]

    # FormRequest
    def post_login(self, response):
        # Extract the hidden fkey and ssrc fields from the login page;
        # they are needed to submit the form successfully.
        fkey = Selector(response).xpath("//input[@name='fkey']/@value").extract()[0]
        ssrc = Selector(response).xpath("//input[@name='ssrc']/@value").extract()[0]
        print(fkey)
        print(ssrc)
        # FormRequest.from_response is a Scrapy helper that builds a request
        # submitting a <form> found in the response.
        # After a successful login, the after_login callback is invoked.
        return [FormRequest.from_response(
            response,
            meta={
                # 'dont_redirect': True,
                # 'handle_httpstatus_list': [302],
                'cookiejar': response.meta['cookiejar'],  # note how the cookie jar is carried over
            },
            headers=self.headers,
            formdata={
                'fkey': fkey,
                'ssrc': ssrc,
                'email': '1045608243@qq.com',
                'password': '12345',
                'oauth_version': '',
                'oauth_server': '',
                'openid_username': '',
                'openid_identifier': '',
            },
            callback=self.after_login,
            dont_filter=True)]

    def after_login(self, response):
        filename = '1.html'
        with open(filename, 'wb') as fp:
            fp.write(response.body)
        # print(response.body)
```

Debug output

```
2017-04-18 11:19:23 [scrapy.utils.log] INFO: Scrapy 1.3.3 started (bot: text5)
2017-04-18 11:19:23 [scrapy.utils.log] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'text5.spiders', 'SPIDER_MODULES': ['text5.spiders'], 'BOT_NAME': 'text5'}
2017-04-18 11:19:23 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.logstats.LogStats',
 'scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.corestats.CoreStats']
2017-04-18 11:19:24 [scrapy.middleware] INFO: Enabled downloader middlewares:
['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
 'scrapy.downloadermiddlewares.retry.RetryMiddleware',
 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
 'scrapy.downloadermiddlewares.stats.DownloaderStats']
2017-04-18 11:19:24 [scrapy.middleware] INFO: Enabled spider middlewares:
['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
 'scrapy.spidermiddlewares.referer.RefererMiddleware',
 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
 'scrapy.spidermiddlewares.depth.DepthMiddleware']
2017-04-18 11:19:24 [scrapy.middleware] INFO: Enabled item pipelines:
[]
2017-04-18 11:19:24 [scrapy.core.engine] INFO: Spider opened
2017-04-18 11:19:24 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2017-04-18 11:19:24 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023
2017-04-18 11:19:24 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://stackoverflow.com/users/login> (referer: None)
1145f3f2e28e56c298bc28a1a735254b

2017-04-18 11:19:25 [scrapy.core.engine] DEBUG: Crawled (404) <GET https://stackoverflow.com/search?q=&ssrc=&openid_username=&oauth_server=&oauth_version=&fkey=1145f3f2e28e56c298bc28a1a735254b&password=wanglihong1993&email=1067863906%40qq.com&openid_identifier=> (referer: https://stackoverflow.com/use...
2017-04-18 11:19:25 [scrapy.spidermiddlewares.httperror] INFO: Ignoring response <404 https://stackoverflow.com/sea...auth_version=&fkey=1145f3f2e28e56c298bc28a1a735254b&password=wanglihong1993&email=1067863906%40qq.com&openid_identifier=>: HTTP status code is not handled or not allowed
2017-04-18 11:19:25 [scrapy.core.engine] INFO: Closing spider (finished)
2017-04-18 11:19:25 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 881,
 'downloader/request_count': 2,
 'downloader/request_method_count/GET': 2,
 'downloader/response_bytes': 12631,
 'downloader/response_count': 2,
 'downloader/response_status_count/200': 1,
 'downloader/response_status_count/404': 1,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2017, 4, 18, 3, 19, 25, 143000),
 'log_count/DEBUG': 3,
 'log_count/INFO': 8,
 'request_depth_max': 1,
 'response_received_count': 2,
 'scheduler/dequeued': 2,
 'scheduler/dequeued/memory': 2,
 'scheduler/enqueued': 2,
 'scheduler/enqueued/memory': 2,
 'start_time': datetime.datetime(2017, 4, 18, 3, 19, 24, 146000)}
2017-04-18 11:19:25 [scrapy.core.engine] INFO: Spider closed (finished)
```

Answers

Answer 1: Dude, you've leaked your password.
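The password is readable because the misdirected form went out as a GET, so every field, password included, became part of the URL that Scrapy writes to its log; the email and password in that URL also differ from the values in the posted code, so editing the code alone did not hide them. A common precaution is to keep credentials out of the spider entirely. A minimal sketch, assuming hypothetical environment variable names `SO_EMAIL` and `SO_PASSWORD`:

```python
import os


def load_credentials(env=None):
    """Return (email, password) read from the environment, never from source.

    SO_EMAIL and SO_PASSWORD are hypothetical variable names for this sketch.
    """
    env = os.environ if env is None else env
    # Collect every required variable that is unset or empty.
    missing = [name for name in ("SO_EMAIL", "SO_PASSWORD") if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variable(s): " + ", ".join(missing))
    return env["SO_EMAIL"], env["SO_PASSWORD"]
```

Inside `post_login`, `email, password = load_credentials()` would then feed `formdata` instead of string literals. Submitting the correct POST form also keeps the fields in the request body rather than in the logged URL.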

相關(guān)文章:

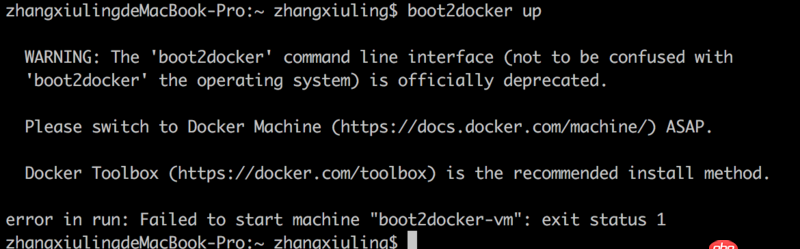

1. 關(guān)docker hub上有些鏡像的tag被標(biāo)記““This image has vulnerabilities””2. boot2docker無(wú)法啟動(dòng)3. docker-compose中volumes的問(wèn)題4. docker安裝后出現(xiàn)Cannot connect to the Docker daemon.5. nignx - docker內(nèi)nginx 80端口被占用6. java - SSH框架中寫分頁(yè)時(shí)service層中不能注入分頁(yè)類7. 關(guān)于docker下的nginx壓力測(cè)試8. dockerfile - 為什么docker容器啟動(dòng)不了?9. node.js - antdesign怎么集合react-redux對(duì)input控件進(jìn)行初始化賦值10. docker容器呢SSH為什么連不通呢?
