python - Why won't my Scrapy spider keep crawling in a loop?

Problem description

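Scrapy only crawls the links on a single page; it does not keep running until the whole site has been crawled. The code is below. I'm a beginner and would appreciate some guidance.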

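    import random
    from urllib.parse import urljoin

    import scrapy

    # DoubanbookItem comes from the project's items module
    # (its import is not shown in the original post).


    class DbbookSpider(scrapy.Spider):
        name = 'imufe'
        allowed_domains = ['http://www.imufe.edu.cn/']
        start_urls = ('http://www.imufe.edu.cn/main/dtxw/201704/t20170414_127035.html')

        def parse(self, response):
            item = DoubanbookItem()
            selector = scrapy.Selector(response)
            print(selector)
            # Collect every href on the page and resolve it against the page URL.
            books = selector.xpath('//a/@href').extract()
            link = []
            for each in books:
                each = urljoin(response.url, each)
                link.append(each)
            for each in link:
                item['link'] = each
                yield item
            # Pick one of the collected links at random as the next page.
            i = random.randint(0, len(link) - 1)
            nextPage = link[i]
            yield scrapy.http.Request(nextPage, callback=self.parse)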
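One thing worth checking in the spider above, offered as a possibility that nothing in this thread confirms: `allowed_domains` is meant to hold bare domain names, while the code passes a full URL. Scrapy's offsite filtering compares each request's host against those entries, so a URL entry will likely cause every follow-up request to be dropped as off-site, which would match the "only one page" symptom. The sketch below is a simplified stand-in for that comparison, not Scrapy's actual implementation; `host_is_allowed` is a hypothetical helper written only for illustration.

```python
from urllib.parse import urlparse


def host_is_allowed(url, allowed_domains):
    """Simplified offsite check (illustrative only): the URL's host must
    equal an allowed domain or be a subdomain of it."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


page = "http://www.imufe.edu.cn/main/dtxw/201704/t20170414_127035.html"

# Entry written as a URL, as in the spider above: the host never equals it.
print(host_is_allowed(page, ["http://www.imufe.edu.cn/"]))  # False

# Entry written as a bare domain name.
print(host_is_allowed(page, ["www.imufe.edu.cn"]))  # True
```

If this is the cause, changing the entry to `'www.imufe.edu.cn'` should let follow-up requests through. Separately, `start_urls` wrapped in parentheses without a trailing comma is a plain string rather than a tuple, so writing it as a list is the safer form.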

Answers

Answer 1: Could it be that you crawled too fast and got blocked?
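If the site really is blocking the spider for crawling too fast, slowing the crawl down is a cheap thing to try. The fragment below is a minimal sketch of a project `settings.py` using Scrapy's standard throttling settings; the specific values are assumptions chosen for illustration, not numbers tuned for this site.

```python
# settings.py (fragment): values are illustrative assumptions.
DOWNLOAD_DELAY = 2                   # seconds to wait between requests to the same site
CONCURRENT_REQUESTS_PER_DOMAIN = 1   # at most one request in flight per domain
AUTOTHROTTLE_ENABLED = True          # adjust the delay based on observed latency
AUTOTHROTTLE_START_DELAY = 2         # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 30          # upper bound on the delay in seconds
```

A block would usually be visible in the crawl log as error status codes or timeouts; if the log instead shows requests being filtered before they are sent, throttling is unlikely to help.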

相關(guān)文章:

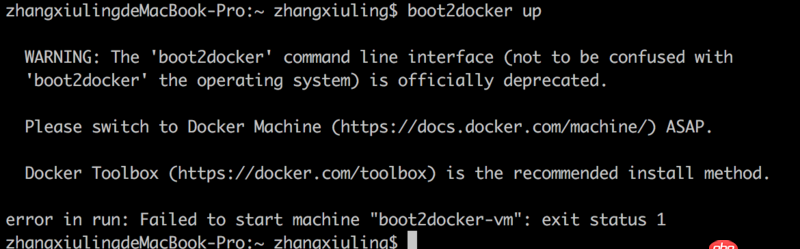

1. 關(guān)docker hub上有些鏡像的tag被標(biāo)記““This image has vulnerabilities””2. docker-compose中volumes的問題3. boot2docker無法啟動(dòng)4. nignx - docker內(nèi)nginx 80端口被占用5. docker安裝后出現(xiàn)Cannot connect to the Docker daemon.6. dockerfile - 為什么docker容器啟動(dòng)不了?7. java - SSH框架中寫分頁(yè)時(shí)service層中不能注入分頁(yè)類8. node.js - antdesign怎么集合react-redux對(duì)input控件進(jìn)行初始化賦值9. 關(guān)于docker下的nginx壓力測(cè)試10. docker容器呢SSH為什么連不通呢?

網(wǎng)公網(wǎng)安備

網(wǎng)公網(wǎng)安備